This year's TechFest has brought together researchers from around the globe to present, experience and discuss some of the emerging technologies from Microsoft's research wing. Highlights include a mobile version of the company's Surface platform, a speech recognition transcription system with automatic translation and a couple of projects which use the body as a computer interface.

The Translating Telephone

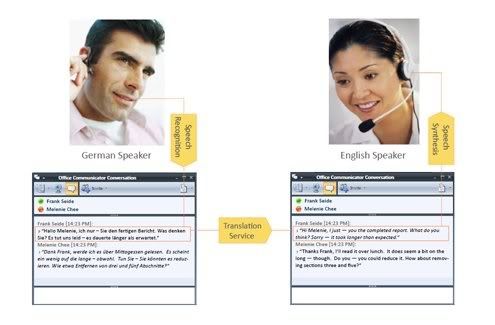

Transcriptor! is a research pilot built on Microsoft Communicator which uses speech recognition to produce a written version of a voice call on the fly. Integration with email would allow useful search and recall possibilities, and the system would no doubt find immediate application in video-conferencing and virtual meeting scenarios. According to the research proposal, "Transcriptor! requires the use of a headset, and a powerful computer, and for now, an American accent."

When combined with a real-time translation tool, the game steps up a notch. As well as seeing translated scripts displayed as the conversation takes place, synthesized audio in a receiver's native language would help ensure that important details are not missed. Native profiling can be undertaken by the system to help achieve better transcription and translation accuracy.

Researchers believe that a very high rate of transcription accuracy could be achievable within the next five years, provided that user location identification, high-quality uncompressed audio and powerful computer systems are all available. Happily, VoIP (Voice over Internet Protocol) technology already provides a suitable framework for such developments, which could see Transcriptor! and Translator! achieve the Holy Grail of more than 90 percent accuracy and bring the fictional universal translator within reach. A project overview is available from Microsoft Research.
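The article does not say how the 90 percent accuracy target quoted for Transcriptor! would be measured. One common way to score a transcript is word accuracy, taken as one minus the word error rate (word-level edit distance divided by the number of words actually spoken). The sketch below illustrates that measure only; it is an assumption, not Microsoft's evaluation method, and the function names and sample sentences are made up for the example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from the transcript
            insertion = curr[j - 1] + 1  # extra word in the transcript
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """One minus the word error rate; can be negative if many extra words are inserted."""
    return 1.0 - word_error_rate(reference, hypothesis)


# Hypothetical example: what was said versus what the system wrote down.
spoken = "please send the report by friday"
transcribed = "please send a report by friday"
print(round(word_accuracy(spoken, transcribed), 3))  # 0.833
```

With one wrong word out of six, this short call scores about 83 percent, which gives a sense of how demanding a target above 90 percent is for free-flowing conversation.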

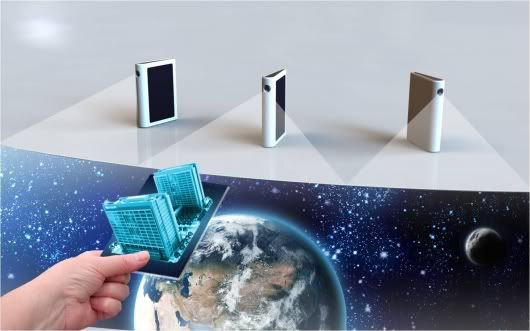

Mobile Surface

Unless you've been living under a rock for the past few years you'll already be familiar with, and probably impressed by, Microsoft's Surface. Researchers from Microsoft Research Asia have taken this to a new level by bringing the gesture-driven, touch-activated interaction offered by Surface to a more mobile environment. The prototype uses a mobile device hooked up to a camera-projector system which projects an image onto a surface and then interprets user interactions with the displayed image. The system interprets both physical contact with the surface of the image and 3-D spatial movements.

The researchers have also looked at using augmented reality and multiple-layer 3-D information visualization, demonstrating a map that gave varying information depending on where the viewing card was placed. Visit the project overview for more information.

Muscle control and surface tension

Technologies that utilize the human body as a computer interface also featured at this year's TechFest. One team has come up with a system where electromyography (EMG) is used to decode muscle signals and then translate them into commands for a computer or other device. As well as providing an overview of the project, this video shows one of the researchers playing an air guitar version of the Guitar Hero video game. In the research presented, members of the team were physically connected to the computer or device, but a wireless EMG system has been created which should open the door to truly mobile, muscle-controlled device interactions.

Two researchers from the Muscle Computer Interface team have also been working with Chris Harrison from Carnegie Mellon University to create Skinput, which allows the surface of the skin to become an input device. Different parts of the body produce varying sounds when tapped, and capturing, identifying and processing these different sounds can result in a system where the whole body could become an acoustic controller. Imagine what Bobby McFerrin could do with such a system!

The team restricted their project to the arm and hands and used a pico projector to generate complicated graphical scrolling menu systems which were controlled by tapping different parts of the arm. This video overview shows a researcher playing a game of Tetris projected onto a hand and controlling the orientation of falling blocks by tapping the ends of fingers. Like the muscle controller, possible future applications include media devices, mobile phones and messaging systems, as well as the potential of having something like a car or garage door remote literally at your fingertips.

Other projects presented at TechFest 2010 included an immersive digital painting prototype and photo manipulation and creation innovations.
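To make the Skinput idea concrete: if taps on different parts of the arm sound different, then a system that has recorded a few example taps per location can guess where a new tap landed by finding the closest match. The sketch below uses a simple nearest-centroid classifier to show that principle. It is not the Skinput team's method; the feature vectors, location names and function names are all hypothetical, and a real system would first have to extract features from the sensor signal.

```python
import math


def centroid(samples):
    """Average feature vector of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(s[k] for s in samples) / n for k in range(len(samples[0]))]


def train(labelled_taps):
    """Map each tap location to the centroid of its example taps."""
    return {location: centroid(samples) for location, samples in labelled_taps.items()}


def classify(model, features):
    """Return the location whose centroid is nearest to the new tap's features."""
    return min(model, key=lambda location: math.dist(model[location], features))


# Hypothetical two-number acoustic features for example taps at three locations.
training_taps = {
    "wrist": [[0.9, 0.2], [0.8, 0.3]],
    "forearm": [[0.4, 0.7], [0.5, 0.6]],
    "fingertip": [[0.1, 0.1], [0.2, 0.2]],
}

model = train(training_taps)
print(classify(model, [0.85, 0.25]))  # wrist
```

Each recognized location could then be bound to a command, such as rotating a Tetris block or scrolling a projected menu.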

Sunday, 7 December 2014

Microsoft shows off the future at TechFest 2010
